Tutorial - LlamaIndex

Let's use LlamaIndex to implement RAG (Retrieval-Augmented Generation) so that an LLM can work with your documents!

What you need

- One of the following Jetson devices:
  - Jetson AGX Orin 64GB Developer Kit
  - Jetson AGX Orin (32GB) Developer Kit
  - Jetson Orin Nano 8GB Developer Kit
- Running one of the following versions of JetPack:
  - JetPack 5 (L4T r35.x)
  - JetPack 6 (L4T r36.x)
- NVMe SSD highly recommended for storage speed and space:
  - 5.5 GB for the `llama-index` container image
  - Space for checkpoints
- Clone and set up `jetson-containers`:

      git clone https://github.com/dusty-nv/jetson-containers
      bash jetson-containers/install.sh
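Before pulling the image, it can help to confirm that the storage requirement is met. The following is a minimal sketch using only the Python standard library. The helper names `free_gb` and `has_enough_space` are made up for this example, and the path `/` is an assumption: point it at wherever your container images are actually stored (for example, the NVMe mount point). It also treats 1 GB as 10^9 bytes, which may differ slightly from how the image size is reported.

```python
import shutil

# Size of the llama-index container image, as listed in the requirements.
REQUIRED_GB = 5.5


def free_gb(path="/"):
    """Return the free space on the filesystem containing `path`, in GB (10^9 bytes)."""
    return shutil.disk_usage(path).free / 1e9


def has_enough_space(path="/", required_gb=REQUIRED_GB):
    """Return True if the filesystem containing `path` has at least `required_gb` free."""
    return free_gb(path) >= required_gb


if __name__ == "__main__":
    path = "/"  # assumption: change to the mount point used for container storage
    print(f"{free_gb(path):.1f} GB free on {path}")
    print(f"Enough for the container image: {has_enough_space(path)}")
```

This only accounts for the image itself; model checkpoints need additional space on top of that.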

How to start a container with samples

Use the `run.sh` and `autotag` scripts to automatically pull or build a compatible container image.

jetson-containers run $(autotag llama-index:samples)

The container has a default run command (`CMD`) that automatically starts the Jupyter Lab server.

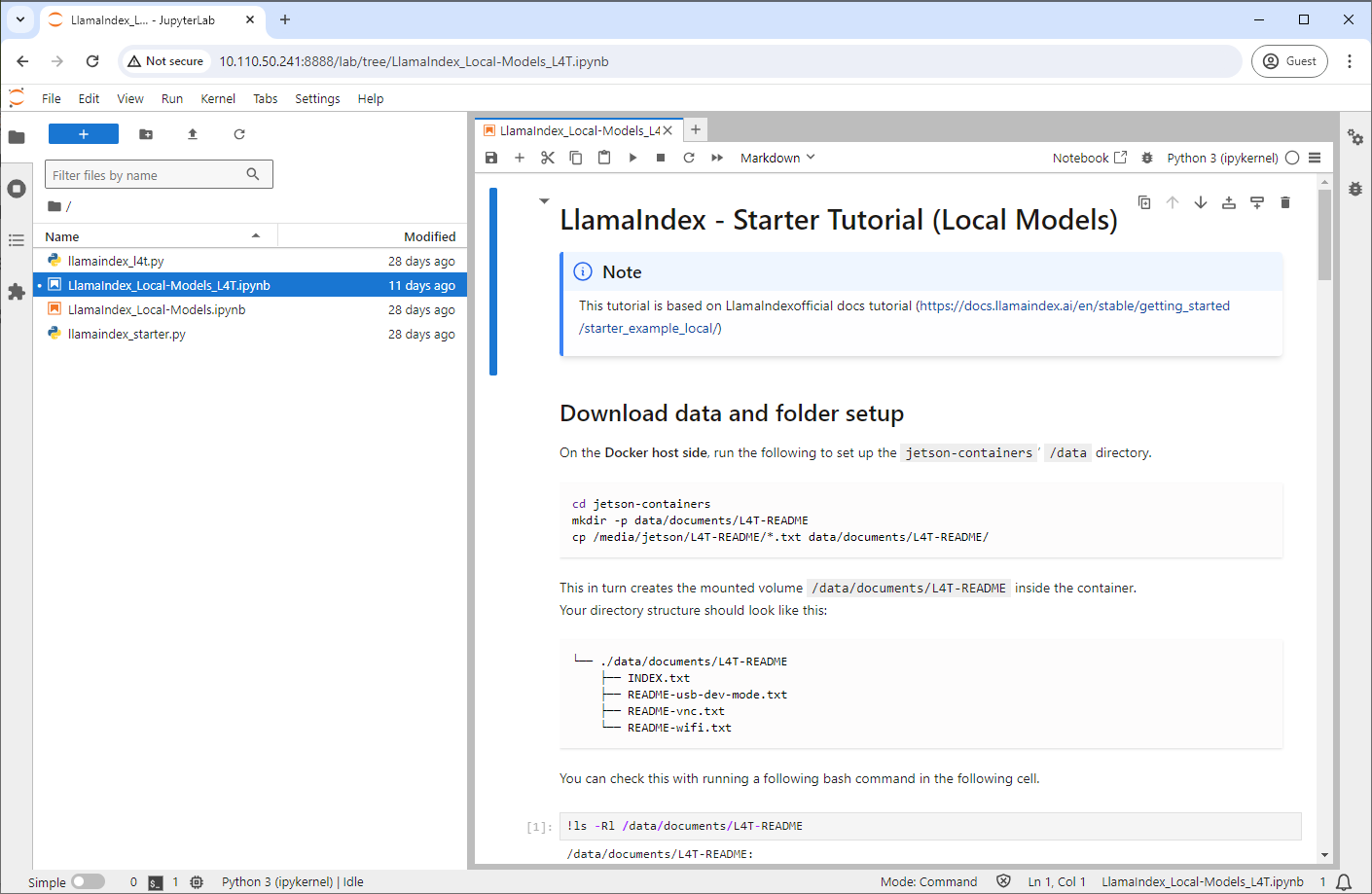

Open your browser and go to `http://<IP_ADDRESS>:8888`.

The default password for Jupyter Lab is `nvidia`.
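If the page does not load, one quick check is whether anything is listening on port 8888 at the address you are using. The sketch below uses only the Python standard library. The function name `is_port_open` is made up for this example, and the host `127.0.0.1` is a placeholder that assumes you run the check on the Jetson itself; replace it with the Jetson's IP address when checking from another machine.

```python
import socket


def is_port_open(host, port=8888, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False


if __name__ == "__main__":
    host = "127.0.0.1"  # assumption: replace with your Jetson's IP address
    print(f"Jupyter Lab reachable at {host}:8888 -> {is_port_open(host)}")
```

A `True` result only means that something accepts connections on that port; it does not confirm that it is the Jupyter Lab server.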

You can follow along with `LlamaIndex_Local-Models_L4T.ipynb` (which is based on the official LlamaIndex tutorial).
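For readers new to RAG, the retrieve-then-generate idea can be illustrated with a toy example. This sketch is not the LlamaIndex API and is not taken from the notebook: it swaps in simple word-count cosine similarity where a real pipeline would typically use an embedding model, and it stops at building the prompt rather than calling an LLM. All function names and the sample text chunks are made up for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, chunks, top_k=1):
    """Return the `top_k` chunks most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        chunks, key=lambda c: cosine(q, Counter(tokenize(c))), reverse=True
    )
    return ranked[:top_k]


def build_prompt(query, chunks, top_k=1):
    """Prepend the retrieved chunks to the question, as a RAG pipeline would."""
    context = "\n".join(retrieve(query, chunks, top_k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


if __name__ == "__main__":
    # Hypothetical document chunks, for illustration only.
    chunks = [
        "Jetson Orin Nano has 8GB of memory.",
        "Jupyter Lab listens on port 8888.",
        "NVMe SSDs provide fast storage.",
    ]
    print(build_prompt("Which port does Jupyter Lab use?", chunks))
```

The resulting prompt would then be sent to the LLM, which answers using the retrieved context instead of relying only on what it learned during training.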